How to Run DeepSeek-R1 Locally on Your Computer (Windows & Mac): A Step-by-Step Guide

Author: NaKmo Flow | 2/4/2025

Introduction:

DeepSeek-R1 is a large language model that has gained significant attention for its strong reasoning capabilities and its openly released weights, which let users run it on their own computers. The full model is so large, however, that it needs server-level hardware to function properly. But what if you don’t have access to such powerful resources? The answer lies in distilled models: smaller versions of DeepSeek that retain much of the original performance while requiring far fewer resources.

In this article, we'll show you how to run a distilled DeepSeek-R1 model on a Microsoft Surface Laptop 3 with an i7 processor and 16GB of RAM. We'll walk through two tools, LM Studio and Ollama, that help you set up and run DeepSeek locally.

1. What is DeepSeek-R1 and Why is it So Popular?

The full DeepSeek-R1 model has 671 billion parameters, which puts it among the largest openly available language models. Running it takes far more memory and compute than a personal computer offers. The good news is that distilled versions make the technology accessible: they require far fewer resources but still deliver impressive results.

Distilled models come in several sizes: 1.5, 7, 8, 14, 32, and 70 billion parameters. They let you run DeepSeek on machines that don’t have server-level capabilities. Even a Microsoft Surface Laptop 3 with an i7 processor and 16GB of RAM can run the smaller versions.
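As a rough illustration of why the distilled sizes matter, the sketch below estimates the memory needed just to hold each model's weights. It assumes the weights are quantized to about 4 bits each (a common choice for local inference, not something the models require) and ignores context and runtime overhead, so real downloads and memory use will be somewhat higher. The function name `estimate_weights_gb` is made up for this example.

```bash
#!/usr/bin/env bash
# Rough estimate of the memory needed to hold model weights.
# Assumption: size in GB ~= parameters (billions) * bits per weight / 8.
# Runtime overhead and context memory are NOT included.

# estimate_weights_gb <params_in_billions> <bits_per_weight>
estimate_weights_gb() {
  LC_ALL=C awk -v params="$1" -v bits="$2" \
    'BEGIN { printf "%.1f\n", params * bits / 8 }'
}

# Model sizes mentioned in this article, in billions of parameters:
# the distilled versions plus the full 671B model.
for size in 1.5 7 8 14 32 70 671; do
  printf '%6sB at 4-bit: ~%s GB\n' "$size" "$(estimate_weights_gb "$size" 4)"
done
```

By this estimate the 7B model needs roughly 3.5 GB for its weights alone, which is why it fits on a 16GB laptop, while the full 671B model needs hundreds of gigabytes.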

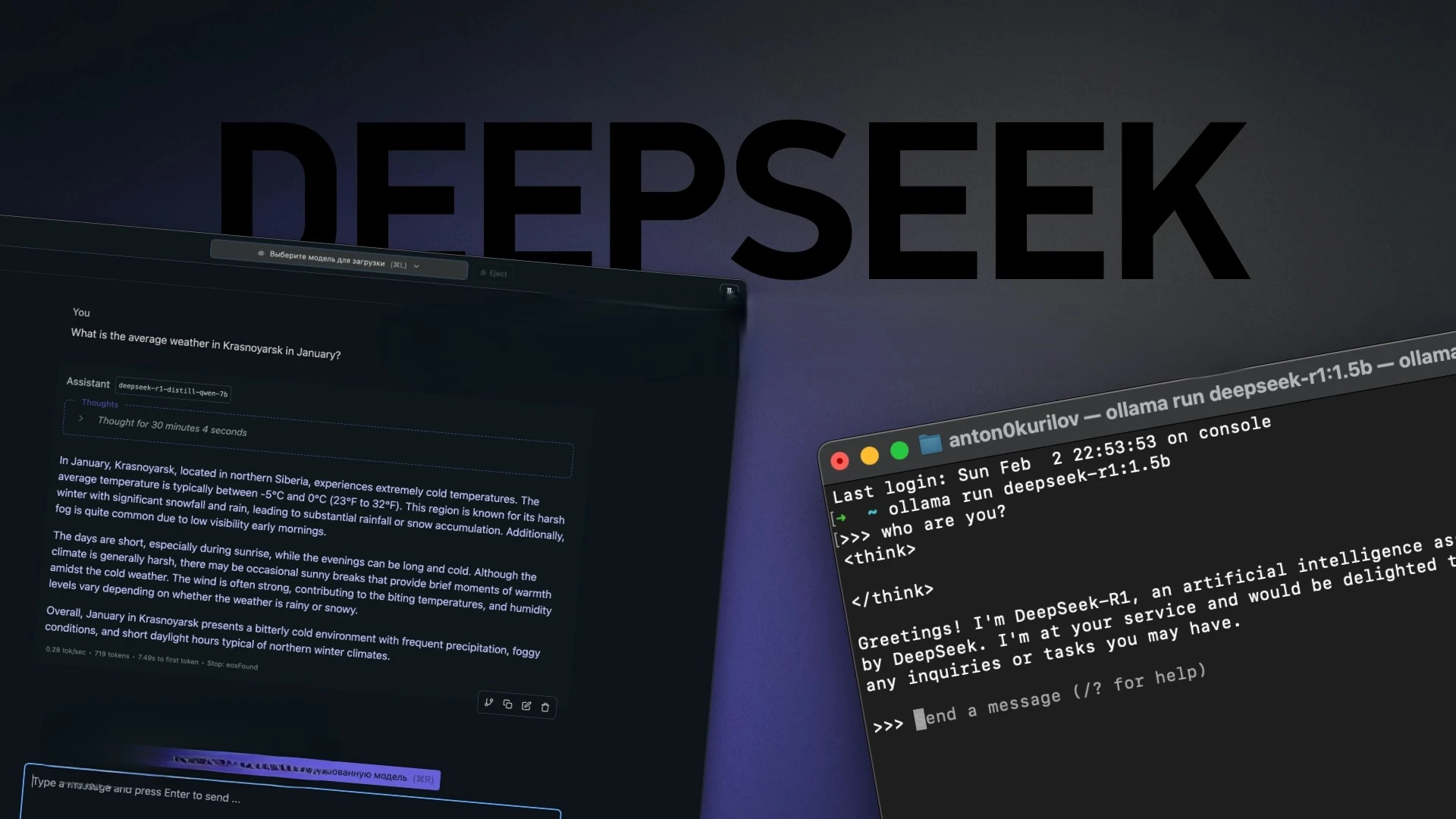

2. How to Set Up DeepSeek Locally Using LM Studio

LM Studio is an easy-to-use tool with a graphical interface for running various neural networks, including DeepSeek-R1, on your own machine. Once a model is downloaded, it runs locally without needing an internet connection. LM Studio also provides multiple configurations and settings to optimize performance.

Steps to Set Up DeepSeek Using LM Studio:

- Download and install LM Studio on your Windows or macOS computer.

- Launch the program and skip the onboarding process by clicking Skip onboarding.

- In the sidebar, use the search icon and type DeepSeek to find the model.

- Choose the appropriate model (for example, DeepSeek-R1 7B) and click Download. The distilled model is about 4.7GB in size.

- Once downloaded, go to the Chat tab, type your question, and get a response from the model.

If you want to reduce memory usage, turn off the Keep Model in Memory setting. Responses will be slower, but memory is freed up for other tasks.

Note: Even on a Surface Laptop 3 (16GB RAM), the 7-billion-parameter model runs slowly, but it’s still usable for basic queries and testing.

3. Running DeepSeek with Ollama: A Minimal Setup

If you prefer working with command-line tools rather than a graphical interface, Ollama is a great alternative. It’s a lightweight tool that allows you to quickly set up and run models locally.

How to Set Up DeepSeek with Ollama:

- Download and install Ollama from the official website.

- Open the Terminal or command-line interface.

- Type the following command to run the 7-billion-parameter model:

```bash
ollama run deepseek-r1:7b
```

The command downloads the 7B model if it isn’t already on your machine, then starts it.

- Once the model is downloaded, you’ll be able to type your query directly in the terminal and get a response.

To exit Ollama, simply type /bye. For a list of all available commands, type /?.
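For repeated use, the interactive steps above can be wrapped in a small script. This is a minimal sketch, assuming Ollama is installed and on your PATH. The helper names `model_tag` and `ask_model` are invented for this example, and the accepted size tags are limited to the distilled sizes discussed in this article.

```bash
#!/usr/bin/env bash
# Minimal sketch: build an Ollama model tag and send a one-off prompt.
# model_tag and ask_model are hypothetical helper names, not part of Ollama.

# model_tag <size>  ->  prints e.g. "deepseek-r1:7b", fails on unknown sizes.
model_tag() {
  case "$1" in
    1.5b|7b|8b|14b|32b|70b) printf 'deepseek-r1:%s\n' "$1" ;;
    *) echo "unknown model size: $1" >&2; return 1 ;;
  esac
}

# ask_model <size> <prompt>
# Runs a single prompt non-interactively; the model is downloaded on first use.
ask_model() {
  local tag
  tag="$(model_tag "$1")" || return 1
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama is not installed or not on PATH" >&2
    return 1
  fi
  ollama run "$tag" "$2"
}
```

For example, `ask_model 7b "Explain what a distilled model is in two sentences."` prints the answer and returns to the shell instead of opening a chat session. Running `ollama list` shows which models are already downloaded.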

4. Limitations to Consider When Running DeepSeek Locally

Running large neural networks locally always comes with some limitations. The larger the model, the higher the hardware requirements.

On a Microsoft Surface Laptop 3, the 7-billion-parameter model runs very slowly, but it can still be used for basic queries or testing. For more complex tasks, or when you need real-time responses, expect significant delays.

If you want faster performance, you can opt for a smaller model, such as the 1.5-billion-parameter version, though it will give lower-quality results.
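One way to see this trade-off on your own machine is to time the same prompt against two model sizes. The sketch below assumes Ollama is installed and uses the `deepseek-r1:1.5b` and `deepseek-r1:7b` tags. `timed_run` and `compare_models` are hypothetical helper names, and the timing is plain wall-clock seconds, so the first run of each model also includes any download time.

```bash
#!/usr/bin/env bash
# Sketch: time the same prompt against two model sizes to compare speed.
# Assumes Ollama is installed; timed_run and compare_models are made-up names.

# timed_run <label> <command...>
# Runs the command, then reports elapsed wall-clock seconds on stderr.
timed_run() {
  local label="$1" start=$SECONDS
  shift
  "$@"
  local status=$?
  echo "[$label] took $((SECONDS - start))s" >&2
  return "$status"
}

# compare_models <prompt>
# Sends the prompt to the 1.5B model, then to the 7B model.
compare_models() {
  local size
  for size in 1.5b 7b; do
    timed_run "deepseek-r1:$size" ollama run "deepseek-r1:$size" "$1"
  done
}
```

Calling `compare_models "Summarize the rules of chess in three sentences."` prints both answers with the time each took, which also lets you judge the difference in answer quality side by side.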

5. Factors to Consider When Running DeepSeek Locally

- Computer Resources: A Microsoft Surface Laptop 3 with an i7 processor and 16GB of RAM is enough for the smaller distilled models. Larger distilled models call for a more powerful system, ideally with 32GB of RAM or more, and the full 671-billion-parameter DeepSeek-R1 needs server-level hardware with hundreds of gigabytes of memory.

- Slower Performance: Even on a relatively powerful Surface Laptop 3, DeepSeek runs slowly, especially with larger models. Depending on the query, you may wait several minutes or longer for a response.

- Answer Quality: The 7-billion-parameter model gives answers that are not as precise or refined as those of the full 671-billion-parameter model. However, it can still provide useful information for basic tasks.

Final Thoughts

Running DeepSeek-R1 locally on your computer is a great way to explore its capabilities without relying on an internet connection. LM Studio and Ollama make the setup process easy, and even a Microsoft Surface Laptop 3 with 16GB RAM can handle smaller versions of the model. While performance will be slower than on larger, server-based systems, it’s still a fantastic way to experiment with this powerful technology.